Latest News

All-hands Meeting

The NSF EPSCoR RII Symposium took place on Monday, May 11, 2009 in Baton Rouge, Louisiana. The symposium featured four 'anchor' presentations on CyberTools/Science Driver interactions, a poster competition, an outreach/education session, and plenty of opportunities for networking. Find the Symposium's presentations and posters here.


CyberTools Software

CyberTools will include services and tools for data sharing, on-demand computing and dynamic scheduling, dynamic data-driven application systems, metadata catalogues, and decision systems, as well as portals, visualization tools, and application toolkits. Initial applications driving CyberTools come from bioinformatics, computational fluid dynamics, and molecular dynamics. CyberTools builds on numerous existing software projects, many of which are led by researchers in Louisiana.

Project Software Development

ADAPT: Adaptive Data Partitioning Toolkit. ADAPT is a collection of unsupervised adaptive and non-adaptive data partitioning methods, such as equi-width partitioning, equi-depth partitioning, proportional K-interval partitioning, weighted K-interval partitioning, and clustering of K-interval partitioning. The tool also includes new data-adaptive partitioning methods developed in the Data Mining Laboratory at Louisiana Tech University. ADAPT also provides a way to quantitatively evaluate and compare partitioning methods.
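The two simplest methods named above, equi-width and equi-depth partitioning, can be illustrated with a minimal Python sketch. This is not ADAPT's code or API; the function names and the sample data are assumptions made for illustration only.

```python
from bisect import bisect_right

def equi_width_edges(values, k):
    """Return the k-1 interior cut points that split [min, max] into k equal-width bins."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / k
    return [lo + i * width for i in range(1, k)]

def equi_depth_edges(values, k):
    """Return k-1 interior cut points so that each bin holds roughly len(values)/k items."""
    ordered = sorted(values)
    n = len(ordered)
    return [ordered[(i * n) // k] for i in range(1, k)]

def assign_bins(values, edges):
    """Map each value to the index of the bin it falls into."""
    return [bisect_right(edges, v) for v in values]

# Hypothetical skewed sample: most values are small, two are large.
data = [1, 2, 3, 4, 5, 6, 20, 40]
width_bins = assign_bins(data, equi_width_edges(data, 4))
depth_bins = assign_bins(data, equi_depth_edges(data, 4))
```

On skewed data like this, equi-width partitioning places most values in the first bin and leaves one bin empty, while equi-depth partitioning spreads the values evenly. Differences of this kind are what a quantitative comparison of partitioning methods would measure.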

AIMS: Associative Image Classification System. AIMS is an automated image search, retrieval, and classification engine. It enables the user to perform content-based image retrieval and image classification using weighted isomorphic relationships. A query can be launched by choosing an image already present in the database or by supplying a completely new image from the web. AIMS also lets the user query with raw features instead of weighted rules, so that different classification techniques can be compared. The current version of AIMS supports querying with both natural and medical images.

Cactus Code: The Cactus Framework is an open source, modular, portable programming environment for collaborative high-performance computing. The Cactus Framework allows large-scale cooperation across the globe, where individual groups design and maintain individual code modules, relying on Cactus to make these modules interoperate. Cactus is used in CyberTools for the CFD, Black Oil, Coastal, and Numerical Toolkits, and has active projects with PetaShare, SAGA, EAVIV, VISH, tangible devices, and grid portals.

Carpet: Adaptive Mesh Refinement for the Cactus Framework. Carpet is an adaptive mesh refinement and multi-patch driver for the Cactus Framework. Cactus is a software framework for solving time-dependent partial differential equations on block-structured grids, and Carpet acts as a driver layer providing adaptive mesh refinement, multi-patch capability, parallelisation, and efficient I/O.

CFD Toolkit: The CFD Toolkit is an ongoing research initiative at the Center for Computation & Technology (CCT), which is building a collaborative problem-solving environment for grand challenge fluid flow and transport problems. The CFD Toolkit is built using the Cactus framework and is currently developing modules to integrate a multiblock stirtank code with the Biotransport group and a boundary element method for complex flow with the Immunosensor group.

|

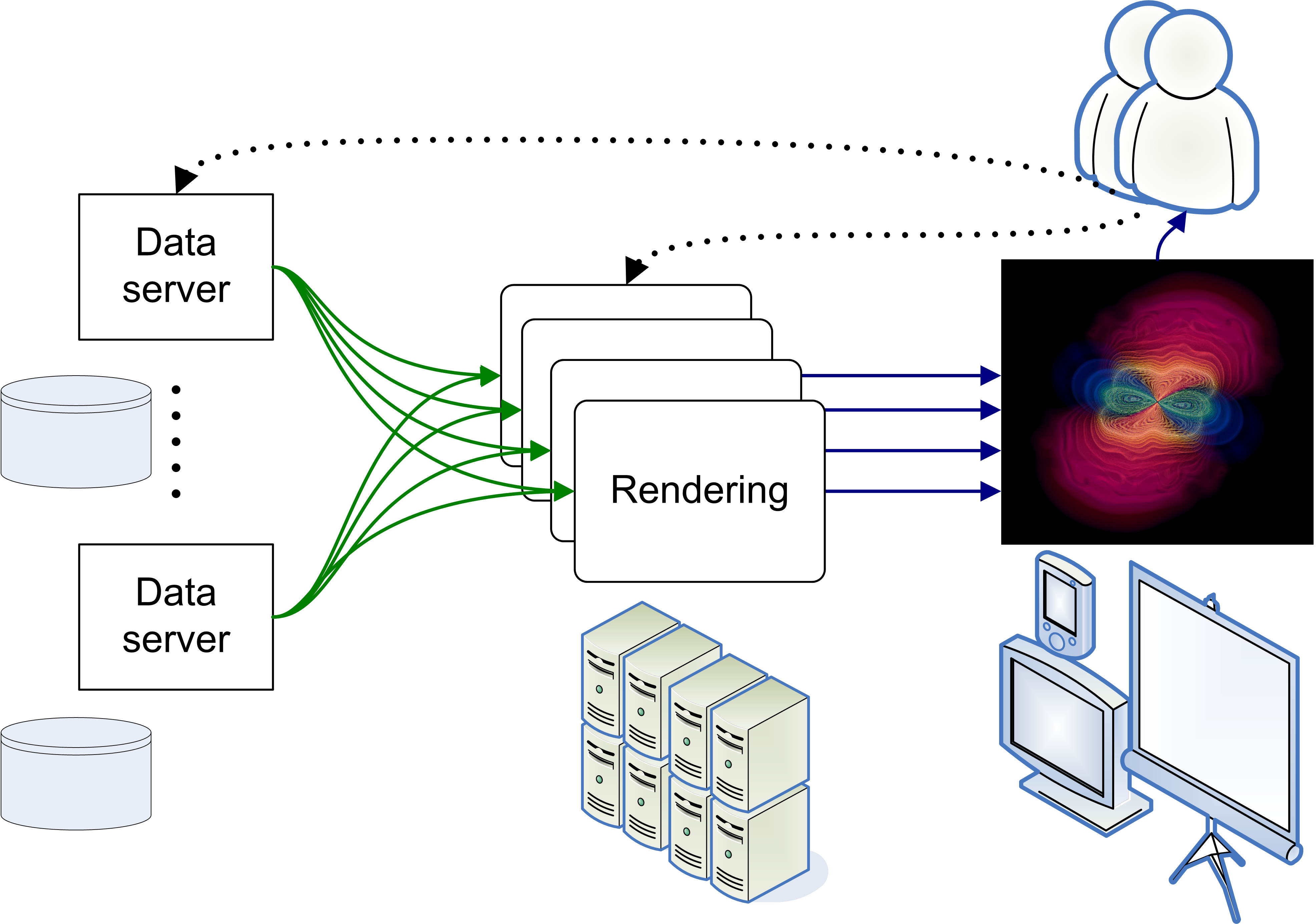

EAVIVThe EAVIV system is a research environment for experimenting with distributed visualization scenarios on high speed networks. The software components making up EAVIV comprises a distributed, efficient data server, a parallel GPU-based renderer (GeRender), and UDP-based image streaming to high resolution displays. Using visualization scenarios from the CyberTools science drivers, EAVIV is being used across LONI to investigate interactive visualization of large scale data sets. |

F5: F5 is a library that provides a simple C API for writing HDF5 files using the concept of fiber bundles. F5 provides a common model that covers a wide range of data types used in scientific computing in a mathematically founded and systematic way. The library includes file converters from common data output formats. In CyberTools, the F5 file format is used as a common base for the varied data from the diverse science drivers, enabling applications to easily share data and visualization tools, and to benefit from improvements in the I/O layers.

HARC: Highly-Available Resource Co-allocator. HARC is a system for reserving multiple resources in a coordinated fashion. HARC can handle multiple types of resource, and has been used to reserve time on supercomputers distributed across a US-wide testbed, together with dedicated 10 Gb/s lightpaths connecting the machines.
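The idea of coordinated reservation can be sketched as an all-or-nothing booking: either every resource is reserved for the requested slot, or none is. The Python below is a toy illustration under that assumption, not HARC's actual protocol or interface; the `Resource` class, `co_allocate` function, and resource names are all hypothetical.

```python
class Resource:
    """Hypothetical resource that tracks which time slots are already booked."""

    def __init__(self, name, booked=()):
        self.name = name
        self.booked = set(booked)

    def try_reserve(self, slot):
        # Refuse the slot if it is already taken; otherwise book it.
        if slot in self.booked:
            return False
        self.booked.add(slot)
        return True

    def release(self, slot):
        self.booked.discard(slot)


def co_allocate(resources, slot):
    """Reserve `slot` on every resource, or on none of them."""
    held = []
    for res in resources:
        if res.try_reserve(slot):
            held.append(res)
        else:
            # One refusal cancels everything reserved so far in this request.
            for r in held:
                r.release(slot)
            return False
    return True


cluster_a = Resource("cluster_a")
cluster_b = Resource("cluster_b", booked={"10:00"})
lightpath = Resource("lightpath")

first = co_allocate([cluster_a, lightpath, cluster_b], "10:00")   # cluster_b is busy
second = co_allocate([cluster_a, lightpath, cluster_b], "11:00")  # all three are free
```

The rollback step is the point of the sketch: a partial reservation (two machines but no lightpath, say) is of no use to a job that needs all of them at once.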

P3Maps: Protein Physico-chemical Property Maps. P3Maps provides an understanding of the relationship between sequence and physico-chemical properties, and paves the way for users to identify local sequence property modulations that impact protein function without changing the protein structure. It gives users a means to analyze multiple sequences (DNA or protein) using the existing Clustal W and phylogenetic tree tools in high-performance computing environments. Its features let users analyze conserved domains using predictive data mining techniques.

PC4: Protein Core Calculation, Conformation and Classification. PC4 is a graph-based data mining tool that gives users a way to analyze the structure of proteins. Its features enable users to extract and isolate protein structural units of sustained invariance among evolutionarily related proteins by integrating physico-chemical properties over the 3-D structure of a protein. The tool provides data mining algorithms for classification and prediction, and connects to known datasets and databases such as the PDB and SCOP.

PetaShare: PetaShare will enable transparent handling of underlying data sharing, archival, and retrieval mechanisms, and will make data available to scientists for analysis and visualization on demand. PetaShare will respond to an urgent need among scientists who have large data generation, sharing, and collaboration requirements. In CyberTools, PetaShare is being used to provide collaborative, large-scale, distributed storage for most of the application teams, where ongoing activities are developing community archives and metadata systems.

SAGA: Simple API for Grid Applications. SAGA provides an application-level programming abstraction for distributed environments. It provides a simple and consistent programmatic interface to the most commonly required Grid functionality. SAGA is soon to become an OGF technical specification, thus ensuring that it is stable, widely adopted, and available. In CyberTools, SAGA is being used to develop application manager tools in different areas, and is being integrated with the Cactus framework to enable different distributed application scenarios.

SimFactory: Herding Numerical Simulations. The simulation factory contains a set of abstractions of the tasks needed to set up and successfully finish numerical simulations using the Cactus framework. These abstractions hide tedious low-level management tasks, capture "best practices" of experienced users, and create a log trail that ensures repeatable and well-documented scientific results. Using these abstractions, many types of potentially disastrous user errors are avoided, and different supercomputers can be used in a uniform manner.

Stork: Stork is a batch scheduler specialized in data placement and data movement, based on the concept and ideal of making data placement a first-class entity in a distributed computing environment. Stork implements techniques specific to queueing, scheduling, and optimization of data placement jobs, and provides a level of abstraction between user applications and the underlying data transfer and storage resources. Stork is an ongoing joint project between Louisiana State University and the University of Wisconsin-Madison. Stork is used in CyberTools as part of workflow systems in coastal modeling, petroleum engineering, astrophysics, and bioinformatics to schedule data transfers across LONI and associated sites.

|

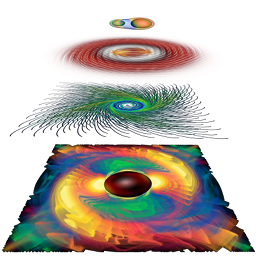

VISHVISH is a highly modular infrastructure to implement visualization algorithms. It provides strong encapsulation between components and abstract interfaces that allow to integrate VISH components into existing applications. It is not a visualization application per se, but comes with a standalone reference implementation of a GUI (qVISH, based on QT). Special attention is given to interactive exploration of large dataset, whereby state-of-the art feauture of modern graphics cards are exploited. Vish is used in CyberTools to provide advanced visualization for complex multiblock and adaptive mesh refinement data, and to develop new visualization algorithms for identifying features such as streamlines or pathlines. |

Supporting Software

GridSphere: The GridSphere portal framework provides an open-source, portlet-based Web portal. GridSphere enables developers to quickly develop and package third-party portlet web applications that can be run and administered within the GridSphere portlet container. In CyberTools, GridSphere is being used to develop grid portals for applications in CFD and black holes.

SAGE: Scalable Adaptive Graphics Environment. SAGE is a system that enables high-definition video and very high-resolution graphics to be streamed in real time from remotely distributed rendering and storage clusters to scalable display screens over ultra-high-speed networks. With SAGE, multiple visualization applications can be streamed to large displays and viewed at the same time. Application windows can be dynamically arranged, as in any standard desktop window manager. SAGE creates an opportunity for local and distant researchers to collaborate on projects involving large amounts of distributed heterogeneous data. SAGE is being deployed in CyberTools to provide image streaming to high-resolution displays.

SPRUCE: SPRUCE is a system to support urgent or event-driven computing on both traditional supercomputers and distributed Grids. Scientists are provided with transferable Right-of-Way tokens with varying urgency levels. During an emergency, a token has to be activated at the SPRUCE portal, and jobs can then request urgent access. Local policies dictate the response, which may include providing "next-to-run" status or immediately preempting other jobs.
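How a site might map an activated token's urgency level to a scheduler response can be sketched in a few lines of Python. This is only an illustration of the policy idea described above, not SPRUCE's interface: the `Token` class, the level names "high" and "medium", and the policy table are assumptions; only the two responses ("next-to-run" and preemption) come from the description.

```python
from dataclasses import dataclass

# Hypothetical local policy: urgency level -> scheduler response.
# The level names are placeholders chosen for this sketch.
SITE_POLICY = {
    "high": "preempt",
    "medium": "next-to-run",
}


@dataclass
class Token:
    """Hypothetical right-of-way token held by a scientist."""
    urgency: str
    activated: bool = False


def scheduler_response(token, policy=SITE_POLICY):
    """Return how a site would treat a job submitted with this token."""
    if not token.activated:
        # A token grants nothing until it has been activated.
        return "normal-queue"
    # Urgency levels the site does not recognise fall back to the normal queue.
    return policy.get(token.urgency, "normal-queue")


token = Token(urgency="high")
before = scheduler_response(token)   # token not yet activated
token.activated = True
after = scheduler_response(token)    # site policy now applies
```

Keeping the policy in a per-site table reflects the point that the response is decided locally: two sites could answer the same urgency level differently.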

Vine: Vine is a modular, extensible Java library that offers developers an easy-to-use, high-level Application Programmer Interface (API) for Grid-enabling applications. Vine can be deployed with ease in desktop, Java Web Start, Java Servlet, and Java Portlet 1.0 environments. Vine also supports a wide array of middleware and third-party services, so you can focus on your applications without losing sight of the Grid.